5 Nonlinear Problems

5.1 Linear versus nonlinear equations

5.1.1 Algebraic equations

A linear, scalar, algebraic equation in \(x\) has the form \[ ax + b = 0, \] for arbitrary real constants \(a\) and \(b\). The unknown is a number \(x\). All other algebraic equations, e.g., \(x^2 + ax + b = 0\), are nonlinear. The typical feature in a nonlinear algebraic equation is that the unknown appears in products with itself, like \(x^2\) or \(e^x = 1 + x +\half x^2 + \frac{1}{3!}x^3 + \cdots\).

We know how to solve a linear algebraic equation, \(x=-b/a\), but there are no general methods for finding the exact solutions of nonlinear algebraic equations, except for very special cases (quadratic equations constitute a primary example). A nonlinear algebraic equation may have no solution, one solution, or many solutions. The tools for solving nonlinear algebraic equations are iterative methods, where we construct a series of linear equations, which we know how to solve, and hope that the solutions of the linear equations converge to a solution of the nonlinear equation we want to solve. Typical methods for nonlinear algebraic equation equations are Newton’s method, the Bisection method, and the Secant method.

5.1.2 Differential equations

The unknown in a differential equation is a function and not a number. In a linear differential equation, all terms involving the unknown function are linear in the unknown function or its derivatives. Linear here means that the unknown function, or a derivative of it, is multiplied by a number or a known function. All other differential equations are non-linear.

The easiest way to see if an equation is nonlinear, is to spot nonlinear terms where the unknown function or its derivatives are multiplied by each other. For example, in \[ u^{\prime}(t) = -a(t)u(t) + b(t), \] the terms involving the unknown function \(u\) are linear: \(u^{\prime}\) contains the derivative of the unknown function multiplied by unity, and \(au\) contains the unknown function multiplied by a known function. However, \[ u^{\prime}(t) = u(t)(1 - u(t)), \] is nonlinear because of the term \(-u^2\) where the unknown function is multiplied by itself. Also \[ \frac{\partial u}{\partial t} + u\frac{\partial u}{\partial x} = 0, \] is nonlinear because of the term \(uu_x\) where the unknown function appears in a product with its derivative. (Note here that we use different notations for derivatives: \(u^{\prime}\) or \(du/dt\) for a function \(u(t)\) of one variable, \(\frac{\partial u}{\partial t}\) or \(u_t\) for a function of more than one variable.)

Another example of a nonlinear equation is \[ u^{\prime\prime} + \sin(u) =0, \] because \(\sin(u)\) contains products of \(u\), which becomes clear if we expand the function in a Taylor series: \[ \sin(u) = u - \frac{1}{3} u^3 + \ldots \]

To really prove mathematically that some differential equation in an unknown \(u\) is linear, show for each term \(T(u)\) that with \(u = au_1 + bu_2\) for constants \(a\) and \(b\), \[ T(au_1 + bu_2) = aT(u_1) + bT(u_2)\tp \] For example, the term \(T(u) = (\sin^2 t)u'(t)\) is linear because

\[\begin{align*} T(au_1 + bu_2) &= (\sin^2 t)(au_1(t) + b u_2(t))\\ & = a(\sin^2 t)u_1(t) + b(\sin^2 t)u_2(t)\\ & =aT(u_1) + bT(u_2)\tp \end{align*}\] However, \(T(u)=\sin u\) is nonlinear because \[ T(au_1 + bu_2) = \sin (au_1 + bu_2) \neq a\sin u_1 + b\sin u_2\tp \]

5.2 A simple model problem

A series of forthcoming examples will explain how to tackle nonlinear differential equations with various techniques. We start with the (scaled) logistic equation as model problem: \[ u^{\prime}(t) = u(t)(1 - u(t)) \tp \tag{5.1}\] This is a nonlinear ordinary differential equation (ODE) which will be solved by different strategies in the following. Depending on the chosen time discretization of (5.1), the mathematical problem to be solved at every time level will either be a linear algebraic equation or a nonlinear algebraic equation. In the former case, the time discretization method transforms the nonlinear ODE into linear subproblems at each time level, and the solution is straightforward to find since linear algebraic equations are easy to solve. However, when the time discretization leads to nonlinear algebraic equations, we cannot (except in very rare cases) solve these without turning to approximate, iterative solution methods.

The next subsections introduce various methods for solving nonlinear differential equations, using (5.1) as model. We shall go through the following set of cases:

- explicit time discretization methods (with no need to solve nonlinear algebraic equations)

- implicit Backward Euler time discretization, leading to nonlinear algebraic equations solved by

- an exact analytical technique

- Picard iteration based on manual linearization

- a single Picard step

- Newton’s method

- implicit Crank-Nicolson time discretization and linearization via a geometric mean formula

Thereafter, we compare the performance of the various approaches. Despite the simplicity of (5.1), the conclusions reveal typical features of the various methods in much more complicated nonlinear PDE problems.

5.3 Linearization by explicit time discretization

Time discretization methods are divided into explicit and implicit methods. Explicit methods lead to a closed-form formula for finding new values of the unknowns, while implicit methods give a linear or nonlinear system of equations that couples (all) the unknowns at a new time level. Here we shall demonstrate that explicit methods constitute an efficient way to deal with nonlinear differential equations.

The Forward Euler method is an explicit method. When applied to (5.1), sampled at \(t=t_n\), it results in \[ \frac{u^{n+1} - u^n}{\Delta t} = u^n(1 - u^n), \] which is a linear algebraic equation for the unknown value \(u^{n+1}\) that we can easily solve: \[ u^{n+1} = u^n + \Delta t\,u^n(1 - u^n)\tp \] In this case, the nonlinearity in the original equation poses no difficulty in the discrete algebraic equation. Any other explicit scheme in time will also give only linear algebraic equations to solve. For example, a typical 2nd-order Runge-Kutta method for (5.1) leads to the following formulas:

\[\begin{align*} u^* &= u^n + \Delta t u^n(1 - u^n),\\ u^{n+1} &= u^n + \Delta t \half \left( u^n(1 - u^n) + u^*(1 - u^*)) \right)\tp \end{align*}\] The first step is linear in the unknown \(u^*\). Then \(u^*\) is known in the next step, which is linear in the unknown \(u^{n+1}\) .

5.4 Exact solution of nonlinear algebraic equations

Switching to a Backward Euler scheme for (5.1), \[ \frac{u^{n} - u^{n-1}}{\Delta t} = u^n(1 - u^n), \tag{5.2}\] results in a nonlinear algebraic equation for the unknown value \(u^n\). The equation is of quadratic type: \[ \Delta t (u^n)^2 + (1-\Delta t)u^n - u^{n-1} = 0, \] and may be solved exactly by the well-known formula for such equations. Before we do so, however, we will introduce a shorter, and often cleaner, notation for nonlinear algebraic equations at a given time level. The notation is inspired by the natural notation (i.e., variable names) used in a program, especially in more advanced partial differential equation problems. The unknown in the algebraic equation is denoted by \(u\), while \(u^{(1)}\) is the value of the unknown at the previous time level (in general, \(u^{(\ell)}\) is the value of the unknown \(\ell\) levels back in time). The notation will be frequently used in later sections. What is meant by \(u\) should be evident from the context: \(u\) may either be 1) the exact solution of the ODE/PDE problem, 2) the numerical approximation to the exact solution, or 3) the unknown solution at a certain time level.

The quadratic equation for the unknown \(u^n\) in (5.2) can, with the new notation, be written \[ F(u) = \Delta t u^2 + (1-\Delta t)u - u^{(1)} = 0\tp \tag{5.3}\] The solution is readily found to be \[ u = \frac{1}{2\Delta t} \left(-1+\Delta t \pm \sqrt{(1-\Delta t)^2 - 4\Delta t u^{(1)}}\right) \tp \tag{5.4}\]

Now we encounter a fundamental challenge with nonlinear algebraic equations: the equation may have more than one solution. How do we pick the right solution? This is in general a hard problem. In the present simple case, however, we can analyze the roots mathematically and provide an answer. The idea is to expand the roots in a series in \(\Delta t\) and truncate after the linear term since the Backward Euler scheme will introduce an error proportional to \(\Delta t\) anyway. Using sympy, we find the following Taylor series expansions of the roots:

>>> import sympy as sym

>>> dt, u_1, u = sym.symbols('dt u_1 u')

>>> r1, r2 = sym.solve(dt*u**2 + (1-dt)*u - u_1, u) # find roots

>>> r1

(dt - sqrt(dt**2 + 4*dt*u_1 - 2*dt + 1) - 1)/(2*dt)

>>> r2

(dt + sqrt(dt**2 + 4*dt*u_1 - 2*dt + 1) - 1)/(2*dt)

>>> print r1.series(dt, 0, 2) # 2 terms in dt, around dt=0

-1/dt + 1 - u_1 + dt*(u_1**2 - u_1) + O(dt**2)

>>> print r2.series(dt, 0, 2)

u_1 + dt*(-u_1**2 + u_1) + O(dt**2)We see that the r1 root, corresponding to a minus sign in front of the square root in (5.4), behaves as \(1/\Delta t\) and will therefore blow up as \(\Delta t\rightarrow 0\)! Since we know that \(u\) takes on finite values, actually it is less than or equal to 1, only the r2 root is of relevance in this case: as \(\Delta t\rightarrow 0\), \(u\rightarrow u^{(1)}\), which is the expected result.

For those who are not well experienced with approximating mathematical formulas by series expansion, an alternative method of investigation is simply to compute the limits of the two roots as \(\Delta t\rightarrow 0\) and see if a limit appears unreasonable:

>>> print r1.limit(dt, 0)

-oo

>>> print r2.limit(dt, 0)

u_15.5 Linearization

When the time integration of an ODE results in a nonlinear algebraic equation, we must normally find its solution by defining a sequence of linear equations and hope that the solutions of these linear equations converge to the desired solution of the nonlinear algebraic equation. Usually, this means solving the linear equation repeatedly in an iterative fashion. Alternatively, the nonlinear equation can sometimes be approximated by one linear equation, and consequently there is no need for iteration.

Constructing a linear equation from a nonlinear one requires linearization of each nonlinear term. This can be done manually as in Picard iteration, or fully algorithmically as in Newton’s method. Examples will best illustrate how to linearize nonlinear problems.

5.6 Picard iteration

Let us write (5.3) in a more compact form \[ F(u) = au^2 + bu + c = 0, \] with \(a=\Delta t\), \(b=1-\Delta t\), and \(c=-u^{(1)}\). Let \(u^{-}\) be an available approximation of the unknown \(u\). Then we can linearize the term \(u^2\) simply by writing \(u^{-}u\). The resulting equation, \(\hat F(u)=0\), is now linear and hence easy to solve: \[ F(u)\approx\hat F(u) = au^{-}u + bu + c = 0\tp \] Since the equation \(\hat F=0\) is only approximate, the solution \(u\) does not equal the exact solution \(\uex\) of the exact equation \(F(\uex)=0\), but we can hope that \(u\) is closer to \(\uex\) than \(u^{-}\) is, and hence it makes sense to repeat the procedure, i.e., set \(u^{-}=u\) and solve \(\hat F(u)=0\) again. There is no guarantee that \(u\) is closer to \(\uex\) than \(u^{-}\), but this approach has proven to be effective in a wide range of applications.

The idea of turning a nonlinear equation into a linear one by using an approximation \(u^{-}\) of \(u\) in nonlinear terms is a widely used approach that goes under many names: fixed-point iteration, the method of successive substitutions, nonlinear Richardson iteration, and Picard iteration. We will stick to the latter name.

Picard iteration for solving the nonlinear equation arising from the Backward Euler discretization of the logistic equation can be written as \[ u = -\frac{c}{au^{-} + b},\quad u^{-}\ \leftarrow\ u\tp \] The \(\leftarrow\) symbols means assignment (we set \(u^{-}\) equal to the value of \(u\)). The iteration is started with the value of the unknown at the previous time level: \(u^{-}=u^{(1)}\).

Some prefer an explicit iteration counter as superscript in the mathematical notation. Let \(u^k\) be the computed approximation to the solution in iteration \(k\). In iteration \(k+1\) we want to solve \[ au^k u^{k+1} + bu^{k+1} + c = 0\quad\Rightarrow\quad u^{k+1} = -\frac{c}{au^k + b},\quad k=0,1,\ldots \] Since we need to perform the iteration at every time level, the time level counter is often also included: \[ au^{n,k} u^{n,k+1} + bu^{n,k+1} - u^{n-1} = 0\quad\Rightarrow\quad u^{n,k+1} = \frac{u^n}{au^{n,k} + b},\quad k=0,1,\ldots, \] with the start value \(u^{n,0}=u^{n-1}\) and the final converged value \(u^{n}=u^{n,k}\) for sufficiently large \(k\).

However, we will normally apply a mathematical notation in our final formulas that is as close as possible to what we aim to write in a computer code and then it becomes natural to use \(u\) and \(u^{-}\) instead of \(u^{k+1}\) and \(u^k\) or \(u^{n,k+1}\) and \(u^{n,k}\).

5.6.1 Stopping criteria

The iteration method can typically be terminated when the change in the solution is smaller than a tolerance \(\epsilon_u\): \[ |u - u^{-}| \leq\epsilon_u, \] or when the residual in the equation is sufficiently small (\(< \epsilon_r\)), \[ |F(u)|= |au^2+bu + c| < \epsilon_r\tp \] ### A single Picard iteration Instead of iterating until a stopping criterion is fulfilled, one may iterate a specific number of times. Just one Picard iteration is popular as this corresponds to the intuitive idea of approximating a nonlinear term like \((u^n)^2\) by \(u^{n-1}u^n\). This follows from the linearization \(u^{-}u^n\) and the initial choice of \(u^{-}=u^{n-1}\) at time level \(t_n\). In other words, a single Picard iteration corresponds to using the solution at the previous time level to linearize nonlinear terms. The resulting discretization becomes (using proper values for \(a\), \(b\), and \(c\)) \[ \frac{u^{n} - u^{n-1}}{\Delta t} = u^n(1 - u^{n-1}), \tag{5.5}\] which is a linear algebraic equation in the unknown \(u^n\), making it easy to solve for \(u^n\) without any need for an alternative notation.

We shall later refer to the strategy of taking one Picard step, or equivalently, linearizing terms with use of the solution at the previous time step, as the Picard1 method. It is a widely used approach in science and technology, but with some limitations if \(\Delta t\) is not sufficiently small (as will be illustrated later).

correspond to a “pure” finite difference method where the equation is sampled at a point and derivatives replaced by differences (because the \(u^{n-1}\) term on the right-hand side must then be \(u^n\)). The best interpretation of the scheme (5.5) is a Backward Euler difference combined with a single (perhaps insufficient) Picard iteration at each time level, with the value at the previous time level as start for the Picard iteration.

5.7 Linearization by a geometric mean

We consider now a Crank-Nicolson discretization of (5.1). This means that the time derivative is approximated by a centered difference, \[ [D_t u = u(1-u)]^{n+\half}, \] written out as \[ \frac{u^{n+1}-u^n}{\Delta t} = u^{n+\half} - (u^{n+\half})^2\tp \tag{5.6}\] The term \(u^{n+\half}\) is normally approximated by an arithmetic mean, \[ u^{n+\half}\approx \half(u^n + u^{n+1}), \] such that the scheme involves the unknown function only at the time levels where we actually intend to compute it. The same arithmetic mean applied to the nonlinear term gives \[ (u^{n+\half})^2\approx \frac{1}{4}(u^n + u^{n+1})^2, \] which is nonlinear in the unknown \(u^{n+1}\). However, using a geometric mean for \((u^{n+\half})^2\) is a way of linearizing the nonlinear term in (5.6): \[ (u^{n+\half})^2\approx u^nu^{n+1}\tp \] Using an arithmetic mean on the linear \(u^{n+\frac{1}{2}}\) term in (5.6) and a geometric mean for the second term, results in a linearized equation for the unknown \(u^{n+1}\): \[ \frac{u^{n+1}-u^n}{\Delta t} = \half(u^n + u^{n+1}) + u^nu^{n+1}, \] which can readily be solved: \[ u^{n+1} = \frac{1 + \half\Delta t}{1+\Delta t u^n - \half\Delta t} u^n\tp \] This scheme can be coded directly, and since there is no nonlinear algebraic equation to iterate over, we skip the simplified notation with \(u\) for \(u^{n+1}\) and \(u^{(1)}\) for \(u^n\). The technique with using a geometric average is an example of transforming a nonlinear algebraic equation to a linear one, without any need for iterations.

The geometric mean approximation is often very effective for linearizing quadratic nonlinearities. Both the arithmetic and geometric mean approximations have truncation errors of order \(\Delta t^2\) and are therefore compatible with the truncation error \(\Oof{\Delta t^2}\) of the centered difference approximation for \(u^\prime\) in the Crank-Nicolson method.

Applying the operator notation for the means and finite differences, the linearized Crank-Nicolson scheme for the logistic equation can be compactly expressed as \[ [D_t u = \overline{u}^{t} + \overline{u^2}^{t,g}]^{n+\half}\tp \]

If we use an arithmetic instead of a geometric mean for the nonlinear term in (5.6), we end up with a nonlinear term \((u^{n+1})^2\). This term can be linearized as \(u^{-}u^{n+1}\) in a Picard iteration approach and in particular as \(u^nu^{n+1}\) in a Picard1 iteration approach. The latter gives a scheme almost identical to the one arising from a geometric mean (the difference in \(u^{n+1}\) being \(\frac{1}{4}\Delta t u^n(u^{n+1}-u^n)\approx \frac{1}{4}\Delta t^2 u^\prime u\), i.e., a difference of size \(\Delta t^2\)).

5.8 Newton’s method

The Backward Euler scheme (5.2) for the logistic equation leads to a nonlinear algebraic equation (5.3). Now we write any nonlinear algebraic equation in the general and compact form \[ F(u) = 0\tp \] Newton’s method linearizes this equation by approximating \(F(u)\) by its Taylor series expansion around a computed value \(u^{-}\) and keeping only the linear part:

\[\begin{align*} F(u) &= F(u^{-}) + F^{\prime}(u^{-})(u - u^{-}) + {\half}F^{\prime\prime}(u^{-})(u-u^{-})^2 +\cdots\\ & \approx F(u^{-}) + F^{\prime}(u^{-})(u - u^{-}) = \hat F(u)\tp \end{align*}\] The linear equation \(\hat F(u)=0\) has the solution \[ u = u^{-} - \frac{F(u^{-})}{F^{\prime}(u^{-})}\tp \] Expressed with an iteration index in the unknown, Newton’s method takes on the more familiar mathematical form \[ u^{k+1} = u^k - \frac{F(u^k)}{F^{\prime}(u^k)},\quad k=0,1,\ldots \] It can be shown that the error in iteration \(k+1\) of Newton’s method is proportional to the square of the error in iteration \(k\), a result referred to as quadratic convergence. This means that for small errors the method converges very fast, and in particular much faster than Picard iteration and other iteration methods. (The proof of this result is found in most textbooks on numerical analysis.) However, the quadratic convergence appears only if \(u^k\) is sufficiently close to the solution. Further away from the solution the method can easily converge very slowly or diverge. The reader is encouraged to do Exercise Section 5.38 to get a better understanding for the behavior of the method.

Application of Newton’s method to the logistic equation discretized by the Backward Euler method is straightforward as we have \[ F(u) = au^2 + bu + c,\quad a=\Delta t,\ b = 1-\Delta t,\ c=-u^{(1)}, \] and then \[ F^{\prime}(u) = 2au + b\tp \] The iteration method becomes \[ u = u^{-} + \frac{a(u^{-})^2 + bu^{-} + c}{2au^{-} + b},\quad u^{-}\ \leftarrow u\tp \tag{5.7}\] At each time level, we start the iteration by setting \(u^{-}=u^{(1)}\). Stopping criteria as listed for the Picard iteration can be used also for Newton’s method.

An alternative mathematical form, where we write out \(a\), \(b\), and \(c\), and use a time level counter \(n\) and an iteration counter \(k\), takes the form \[ u^{n,k+1} = u^{n,k} + \frac{\Delta t (u^{n,k})^2 + (1-\Delta t)u^{n,k} - u^{n-1}} {2\Delta t u^{n,k} + 1 - \Delta t},\quad u^{n,0}=u^{n-1}, \tag{5.8}\] for \(k=0,1,\ldots\). A program implementation is much closer to (5.7) than to (5.8), but the latter is better aligned with the established mathematical notation used in the literature.

5.9 Relaxation

One iteration in Newton’s method or Picard iteration consists of solving a linear problem \(\hat F(u)=0\). Sometimes convergence problems arise because the new solution \(u\) of \(\hat F(u)=0\) is “too far away” from the previously computed solution \(u^{-}\). A remedy is to introduce a relaxation, meaning that we first solve \(\hat F(u^*)=0\) for a suggested value \(u^*\) and then we take \(u\) as a weighted mean of what we had, \(u^{-}\), and what our linearized equation \(\hat F=0\) suggests, \(u^*\): \[ u = \omega u^* + (1-\omega) u^{-}\tp \] The parameter \(\omega\) is known as a relaxation parameter, and a choice \(\omega < 1\) may prevent divergent iterations.

Relaxation in Newton’s method can be directly incorporated in the basic iteration formula: \[ u = u^{-} - \omega \frac{F(u^{-})}{F^{\prime}(u^{-})}\tp \tag{5.9}\]

5.10 Implementation and experiments

The program logistic.py contains implementations of all the methods described above. Below is an extract of the file showing how the Picard and Newton methods are implemented for a Backward Euler discretization of the logistic equation.

def BE_logistic(u0, dt, Nt, choice=“Picard”, eps_r=1e-3, omega=1, max_iter=1000): if choice == “Picard1”: choice = “Picard” max_iter = 1

u = np.zeros(Nt + 1)

iterations = []

u[0] = u0

for n in range(1, Nt + 1):

a = dt

b = 1 - dt

c = -u[n - 1]

if choice in ("r1", "r2"):

r1, r2 = quadratic_roots(a, b, c)

u[n] = r1 if choice == "r1" else r2

iterations.append(0)

elif choice == "Picard":

def F(u):

return a * u**2 + b * u + c

u_ = u[n - 1]

k = 0

while abs(F(u_)) > eps_r and k < max_iter:

u_ = omega * (-c / (a * u_ + b)) + (1 - omega) * u_

k += 1

u[n] = u_

iterations.append(k)

elif choice == "Newton":

def F(u):

return a * u**2 + b * u + c

def dF(u):

return 2 * a * u + b

u_ = u[n - 1]

k = 0

while abs(F(u_)) > eps_r and k < max_iter:

u_ = u_ - F(u_) / dF(u_)

k += 1

u[n] = u_

iterations.append(k)

return u, iterations

```python

def BE_logistic(u0, dt, Nt, choice='Picard',

eps_r=1E-3, omega=1, max_iter=1000):

if choice == 'Picard1':

choice = 'Picard'

max_iter = 1

u = np.zeros(Nt+1)

iterations = []

u[0] = u0

for n in range(1, Nt+1):

a = dt

b = 1 - dt

c = -u[n-1]

if choice == 'Picard':

def F(u):

return a*u**2 + b*u + c

u_ = u[n-1]

k = 0

while abs(F(u_)) > eps_r and k < max_iter:

u_ = omega*(-c/(a*u_ + b)) + (1-omega)*u_

k += 1

u[n] = u_

iterations.append(k)

elif choice == 'Newton':

def F(u):

return a*u**2 + b*u + c

def dF(u):

return 2*a*u + b

u_ = u[n-1]

k = 0

while abs(F(u_)) > eps_r and k < max_iter:

u_ = u_ - F(u_)/dF(u_)

k += 1

u[n] = u_

iterations.append(k)

return u, iterationsThe Crank-Nicolson method utilizing a linearization based on the geometric mean gives a simpler algorithm:

def CN_logistic(u0, dt, Nt):

u = np.zeros(Nt + 1)

u[0] = u0

for n in range(0, Nt):

u[n + 1] = (1 + 0.5 * dt) / (1 + dt * u[n] - 0.5 * dt) * u[n]

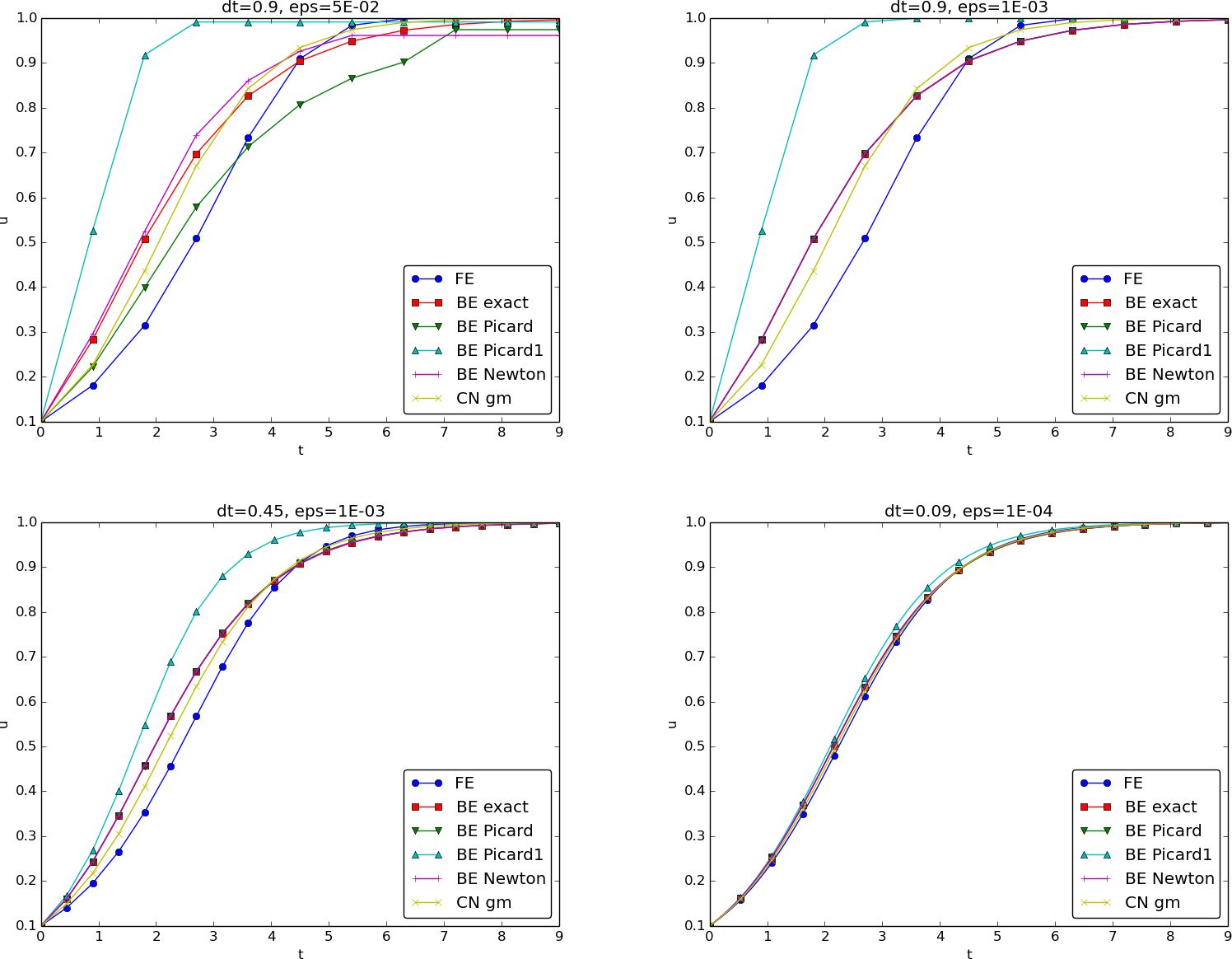

return uWe may run experiments with the model problem (5.1) and the different strategies for dealing with nonlinearities as described above. For a quite coarse time resolution, \(\Delta t=0.9\), use of a tolerance \(\epsilon_r=0.1\) in the stopping criterion introduces an iteration error, especially in the Picard iterations, that is visibly much larger than the time discretization error due to a large \(\Delta t\). This is illustrated by comparing the upper two plots in Figure Figure 5.1. The one to the right has a stricter tolerance \(\epsilon = 10^{-3}\), which causes all the curves corresponding to Picard and Newton iteration to be on top of each other (and no changes can be visually observed by reducing \(\epsilon_r\) further). The reason why Newton’s method does much better than Picard iteration in the upper left plot is that Newton’s method with one step comes far below the \(\epsilon_r\) tolerance, while the Picard iteration needs on average 7 iterations to bring the residual down to \(\epsilon_r=10^{-1}\), which gives insufficient accuracy in the solution of the nonlinear equation. It is obvious that the Picard1 method gives significant errors in addition to the time discretization unless the time step is as small as in the lower right plot.

The BE exact curve corresponds to using the exact solution of the quadratic equation at each time level, so this curve is only affected by the Backward Euler time discretization. The CN gm curve corresponds to the theoretically more accurate Crank-Nicolson discretization, combined with a geometric mean for linearization. This curve appears more accurate, especially if we take the plot in the lower right with a small \(\Delta t\) and an appropriately small \(\epsilon_r\) value as the exact curve.

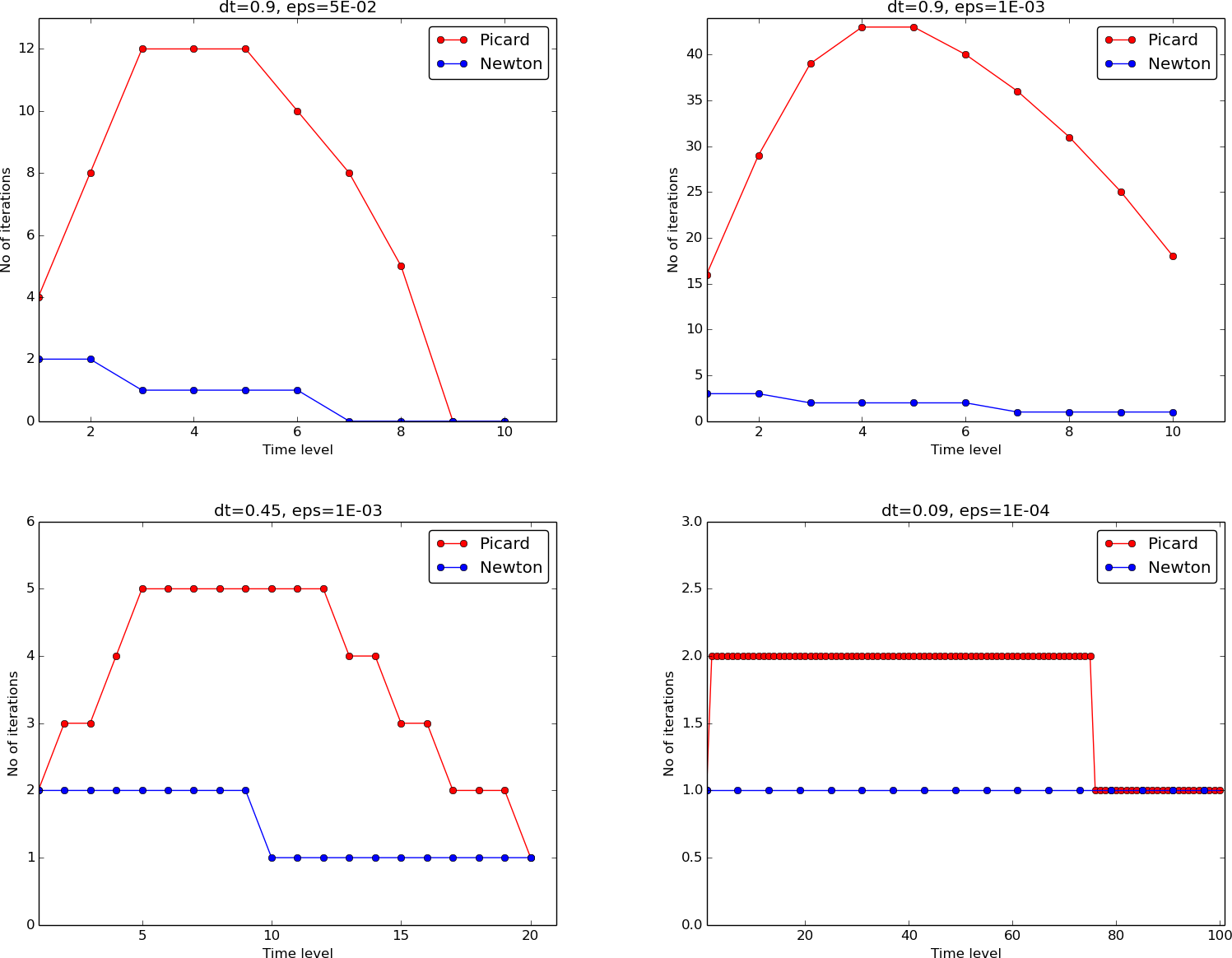

When it comes to the need for iterations, Figure Figure 5.2 displays the number of iterations required at each time level for Newton’s method and Picard iteration. The smaller \(\Delta t\) is, the better starting value we have for the iteration, and the faster the convergence is. With \(\Delta t = 0.9\) Picard iteration requires on average 32 iterations per time step, but this number is dramatically reduced as \(\Delta t\) is reduced.

However, introducing relaxation and a parameter \(\omega=0.8\) immediately reduces the average of 32 to 7, indicating that for the large \(\Delta t=0.9\), Picard iteration takes too long steps. An approximately optimal value for \(\omega\) in this case is 0.5, which results in an average of only 2 iterations! An even more dramatic impact of \(\omega\) appears when \(\Delta t = 1\): Picard iteration does not convergence in 1000 iterations, but \(\omega=0.5\) again brings the average number of iterations down to 2.

Remark. The simple Crank-Nicolson method with a geometric mean for the quadratic nonlinearity gives visually more accurate solutions than the Backward Euler discretization. Even with a tolerance of \(\epsilon_r=10^{-3}\), all the methods for treating the nonlinearities in the Backward Euler discretization give graphs that cannot be distinguished. So for accuracy in this problem, the time discretization is much more crucial than \(\epsilon_r\). Ideally, one should estimate the error in the time discretization, as the solution progresses, and set \(\epsilon_r\) accordingly.

5.11 Generalization to a general nonlinear ODE

Let us see how the various methods in the previous sections can be applied to the more generic model \[ u^{\prime} = f(u, t), \tag{5.10}\] where \(f\) is a nonlinear function of \(u\).

5.11.1 Explicit time discretization

Explicit ODE methods like the Forward Euler scheme, Runge-Kutta methods and Adams-Bashforth methods all evaluate \(f\) at time levels where \(u\) is already computed, so nonlinearities in \(f\) do not pose any difficulties.

5.11.2 Backward Euler discretization

Approximating \(u^{\prime}\) by a backward difference leads to a Backward Euler scheme, which can be written as \[ F(u^n) = u^{n} - \Delta t\, f(u^n, t_n) - u^{n-1}=0, \] or alternatively \[ F(u) = u - \Delta t\, f(u, t_n) - u^{(1)} = 0\tp \] A simple Picard iteration, not knowing anything about the nonlinear structure of \(f\), must approximate \(f(u,t_n)\) by \(f(u^{-},t_n)\): \[ \hat F(u) = u - \Delta t\, f(u^{-},t_n) - u^{(1)}\tp \] The iteration starts with \(u^{-}=u^{(1)}\) and proceeds with repeating \[ u^* = \Delta t\, f(u^{-},t_n) + u^{(1)},\quad u = \omega u^* + (1-\omega)u^{-}, \quad u^{-}\ \leftarrow\ u, \] until a stopping criterion is fulfilled.

Evaluating \(f\) for a known \(u^{-}\) is referred to as explicit treatment of \(f\), while if \(f(u,t)\) has some structure, say \(f(u,t) = u^3\), parts of \(f\) can involve the unknown \(u\), as in the manual linearization \((u^{-})^2u\), and then the treatment of \(f\) is “more implicit” and “less explicit”. This terminology is inspired by time discretization of \(u^{\prime}=f(u,t)\), where evaluating \(f\) for known \(u\) values gives explicit schemes, while treating \(f\) or parts of \(f\) implicitly, makes \(f\) contribute to the unknown terms in the equation at the new time level.

Explicit treatment of \(f\) usually means stricter conditions on \(\Delta t\) to achieve stability of time discretization schemes. The same applies to iteration techniques for nonlinear algebraic equations: the “less” we linearize \(f\) (i.e., the more we keep of \(u\) in the original formula), the faster the convergence may be.

We may say that \(f(u,t)=u^3\) is treated explicitly if we evaluate \(f\) as \((u^{-})^3\), partially implicit if we linearize as \((u^{-})^2u\) and fully implicit if we represent \(f\) by \(u^3\). (Of course, the fully implicit representation will require further linearization, but with \(f(u,t)=u^2\) a fully implicit treatment is possible if the resulting quadratic equation is solved with a formula.)

For the ODE \(u^{\prime}=-u^3\) with \(f(u,t)=-u^3\) and coarse time resolution \(\Delta t = 0.4\), Picard iteration with \((u^{-})^2u\) requires 8 iterations with \(\epsilon_r = 10^{-3}\) for the first time step, while \((u^{-})^3\) leads to 22 iterations. After about 10 time steps both approaches are down to about 2 iterations per time step, but this example shows a potential of treating \(f\) more implicitly.

A trick to treat \(f\) implicitly in Picard iteration is to evaluate it as \(f(u^{-},t)u/u^{-}\). For a polynomial \(f\), \(f(u,t)=u^m\), this corresponds to \((u^{-})^{m}u/u^{-}=(u^{-})^{m-1}u\). Sometimes this more implicit treatment has no effect, as with \(f(u,t)=\exp(-u)\) and \(f(u,t)=\ln (1+u)\), but with \(f(u,t)=\sin(2(u+1))\), the \(f(u^{-},t)u/u^{-}\) trick leads to 7, 9, and 11 iterations during the first three steps, while \(f(u^{-},t)\) demands 17, 21, and 20 iterations. (Experiments can be done with the code ODE_Picard_tricks.py.)

Newton’s method applied to a Backward Euler discretization of \(u^{\prime}=f(u,t)\) requires computation of the derivative \[ F^{\prime}(u) = 1 - \Delta t\frac{\partial f}{\partial u}(u,t_n)\tp \] Starting with the solution at the previous time level, \(u^{-}=u^{(1)}\), we can just use the standard formula \[ u = u^{-} - \omega \frac{F(u^{-})}{F^{\prime}(u^{-})} = u^{-} - \omega \frac{u^{-} - \Delta t\, f(u^{-}, t_n) - u^{(1)}}{1 - \Delta t \frac{\partial}{\partial u}f(u^{-},t_n)} \tp \tag{5.11}\]

5.11.3 Crank-Nicolson discretization

The standard Crank-Nicolson scheme with arithmetic mean approximation of \(f\) takes the form \[ \frac{u^{n+1} - u^n}{\Delta t} = \half(f(u^{n+1}, t_{n+1}) + f(u^n, t_n))\tp \] We can write the scheme as a nonlinear algebraic equation \[ F(u) = u - u^{(1)} - \Delta t{\half}f(u,t_{n+1}) - \Delta t{\half}f(u^{(1)},t_{n}) = 0\tp \tag{5.12}\] A Picard iteration scheme must in general employ the linearization \[ \hat F(u) = u - u^{(1)} - \Delta t{\half}f(u^{-},t_{n+1}) - \Delta t{\half}f(u^{(1)},t_{n}), \] while Newton’s method can apply the general formula (5.11) with \(F(u)\) given in (5.12) and \[ F^{\prime}(u)= 1 - \half\Delta t\frac{\partial f}{\partial u}(u,t_{n+1})\tp \]

5.12 Systems of ODEs

We may write a system of ODEs

\[\begin{align*} \frac{d}{dt}u_0(t) &= f_0(u_0(t),u_1(t),\ldots,u_N(t),t),\\ \frac{d}{dt}u_1(t) &= f_1(u_0(t),u_1(t),\ldots,u_N(t),t),\\ &\vdots\\ \frac{d}{dt}u_m(t) &= f_m(u_0(t),u_1(t),\ldots,u_N(t),t), \end{align*}\] as \[ u^{\prime} = f(u,t),\quad u(0)=U_0, \] if we interpret \(u\) as a vector \(u=(u_0(t),u_1(t),\ldots,u_N(t))\) and \(f\) as a vector function with components \((f_0(u,t),f_1(u,t),\ldots,f_N(u,t))\).

Most solution methods for scalar ODEs, including the Forward and Backward Euler schemes and the Crank-Nicolson method, generalize in a straightforward way to systems of ODEs simply by using vector arithmetics instead of scalar arithmetics, which corresponds to applying the scalar scheme to each component of the system. For example, here is a backward difference scheme applied to each component,

\[\begin{align*} \frac{u_0^n- u_0^{n-1}}{\Delta t} &= f_0(u^n,t_n),\\ \frac{u_1^n- u_1^{n-1}}{\Delta t} &= f_1(u^n,t_n),\\ &\vdots\\ \frac{u_N^n- u_N^{n-1}}{\Delta t} &= f_N(u^n,t_n), \end{align*}\] which can be written more compactly in vector form as \[ \frac{u^n- u^{n-1}}{\Delta t} = f(u^n,t_n)\tp \] This is a system of algebraic equations, \[ u^n - \Delta t\,f(u^n,t_n) - u^{n-1}=0, \] or written out

\[\begin{align*} u_0^n - \Delta t\, f_0(u^n,t_n) - u_0^{n-1} &= 0,\\ &\vdots\\ u_N^n - \Delta t\, f_N(u^n,t_n) - u_N^{n-1} &= 0\tp \end{align*}\]

5.12.1 Example

We shall address the \(2\times 2\) ODE system for oscillations of a pendulum subject to gravity and air drag. The system can be written as

\[\begin{align} \dot\omega &= -\sin\theta -\beta \omega |\omega|,\\ \dot\theta &= \omega, \end{align}\] where \(\beta\) is a dimensionless parameter (this is the scaled, dimensionless version of the original, physical model). The unknown components of the system are the angle \(\theta(t)\) and the angular velocity \(\omega(t)\). We introduce \(u_0=\omega\) and \(u_1=\theta\), which leads to

\[\begin{align*} u_0^{\prime} = f_0(u,t) &= -\sin u_1 - \beta u_0|u_0|,\\ u_1^{\prime} = f_1(u,t) &= u_0\tp \end{align*}\] A Crank-Nicolson scheme reads

\[\begin{align} \frac{u_0^{n+1}-u_0^{n}}{\Delta t} &= -\sin u_1^{n+\frac{1}{2}} - \beta u_0^{n+\frac{1}{2}}|u_0^{n+\frac{1}{2}}|\nonumber\\ & \approx -\sin\left(\frac{1}{2}(u_1^{n+1} + u_1n)\right) - \beta\frac{1}{4} (u_0^{n+1} + u_0^n)|u_0^{n+1}+u_0^n|,\\ \frac{u_1^{n+1}-u_1^n}{\Delta t} &= u_0^{n+\frac{1}{2}}\approx \frac{1}{2} (u_0^{n+1}+u_0^n)\tp \end{align}\] This is a coupled system of two nonlinear algebraic equations in two unknowns \(u_0^{n+1}\) and \(u_1^{n+1}\).

Using the notation \(u_0\) and \(u_1\) for the unknowns \(u_0^{n+1}\) and \(u_1^{n+1}\) in this system, writing \(u_0^{(1)}\) and \(u_1^{(1)}\) for the previous values \(u_0^n\) and \(u_1^n\), multiplying by \(\Delta t\) and moving the terms to the left-hand sides, gives

\[ u_0 - u_0^{(1)} + \Delta t\,\sin\left(\frac{1}{2}(u_1 + u_1^{(1)})\right) + \frac{1}{4}\Delta t\beta (u_0 + u_0^{(1)})|u_0 + u_0^{(1)}| =0, \tag{5.13}\] \[ u_1 - u_1^{(1)} -\frac{1}{2}\Delta t(u_0 + u_0^{(1)}) =0\tp \tag{5.14}\] Obviously, we have a need for solving systems of nonlinear algebraic equations, which is the topic of the next section.

5.13 Systems of nonlinear algebraic equations

Implicit time discretization methods for a system of ODEs, or a PDE, lead to systems of nonlinear algebraic equations, written compactly as \[ F(u) = 0, \] where \(u\) is a vector of unknowns \(u=(u_0,\ldots,u_N)\), and \(F\) is a vector function: \(F=(F_0,\ldots,F_N)\). The system at the end of Section Section 5.12 fits this notation with \(N=1\), \(F_0(u)\) given by the left-hand side of (5.13), while \(F_1(u)\) is the left-hand side of (5.14).

Sometimes the equation system has a special structure because of the underlying problem, e.g., \[ A(u)u = b(u), \] with \(A(u)\) as an \((N+1)\times (N+1)\) matrix function of \(u\) and \(b\) as a vector function: \(b=(b_0,\ldots,b_N)\).

We shall next explain how Picard iteration and Newton’s method can be applied to systems like \(F(u)=0\) and \(A(u)u=b(u)\). The exposition has a focus on ideas and practical computations. More theoretical considerations, including quite general results on convergence properties of these methods, can be found in Kelley (Kelley 1995).

5.14 Picard iteration

We cannot apply Picard iteration to nonlinear equations unless there is some special structure. For the commonly arising case \(A(u)u=b(u)\) we can linearize the product \(A(u)u\) to \(A(u^{-})u\) and \(b(u)\) as \(b(u^{-})\). That is, we use the most previously computed approximation in \(A\) and \(b\) to arrive at a linear system for \(u\): \[ A(u^{-})u = b(u^{-})\tp \] A relaxed iteration takes the form \[ A(u^{-})u^* = b(u^{-}),\quad u = \omega u^* + (1-\omega)u^{-}\tp \] In other words, we solve a system of nonlinear algebraic equations as a sequence of linear systems.

Given \(A(u)u=b(u)\) and an initial guess \(u^{-}\), iterate until convergence:

- solve \(A(u^{-})u^* = b(u^{-})\) with respect to \(u^*\)

- \(u = \omega u^* + (1-\omega) u^{-}\)

- \(u^{-}\ \leftarrow\ u\)

“Until convergence” means that the iteration is stopped when the change in the unknown, \(||u - u^{-}||\), or the residual \(||A(u)u-b||\), is sufficiently small, see Section Section 5.16 for more details.

5.15 Newton’s method

The natural starting point for Newton’s method is the general nonlinear vector equation \(F(u)=0\). As for a scalar equation, the idea is to approximate \(F\) around a known value \(u^{-}\) by a linear function \(\hat F\), calculated from the first two terms of a Taylor expansion of \(F\). In the multi-variate case these two terms become \[ F(u^{-}) + J(u^{-}) \cdot (u - u^{-}), \] where \(J\) is the Jacobian of \(F\), defined by \[ J_{i,j} = \frac{\partial F_i}{\partial u_j}\tp \] So, the original nonlinear system is approximated by \[ \hat F(u) = F(u^{-}) + J(u^{-}) \cdot (u - u^{-})=0, \] which is linear in \(u\) and can be solved in a two-step procedure: first solve \(J\delta u = -F(u^{-})\) with respect to the vector \(\delta u\) and then update \(u = u^{-} + \delta u\). A relaxation parameter can easily be incorporated: \[ u = \omega(u^{-} +\delta u) + (1-\omega)u^{-} = u^{-} + \omega\delta u\tp \]

Given \(F(u)=0\) and an initial guess \(u^{-}\), iterate until convergence:

- solve \(J\delta u = -F(u^{-})\) with respect to \(\delta u\)

- \(u = u^{-} + \omega\delta u\)

- \(u^{-}\ \leftarrow\ u\)

For the special system with structure \(A(u)u=b(u)\), \[ F_i = \sum_k A_{i,k}(u)u_k - b_i(u), \] one gets \[ J_{i,j} = \sum_k \frac{\partial A_{i,k}}{\partial u_j}u_k + A_{i,j} - \frac{\partial b_i}{\partial u_j}\tp \] We realize that the Jacobian needed in Newton’s method consists of \(A(u^{-})\) as in the Picard iteration plus two additional terms arising from the differentiation. Using the notation \(A^{\prime}(u)\) for \(\partial A/\partial u\) (a quantity with three indices: \(\partial A_{i,k}/\partial u_j\)), and \(b^{\prime}(u)\) for \(\partial b/\partial u\) (a quantity with two indices: \(\partial b_i/\partial u_j\)), we can write the linear system to be solved as \[ (A + A^{\prime}u + b^{\prime})\delta u = -Au + b, \] or \[ (A(u^{-}) + A^{\prime}(u^{-})u^{-} + b^{\prime}(u^{-}))\delta u = -A(u^{-})u^{-} + b(u^{-})\tp \] Rearranging the terms demonstrates the difference from the system solved in each Picard iteration: \[ \underbrace{A(u^{-})(u^{-}+\delta u) - b(u^{-})}_{\hbox{Picard system}} +\, \gamma (A^{\prime}(u^{-})u^{-} + b^{\prime}(u^{-}))\delta u = 0\tp \] Here we have inserted a parameter \(\gamma\) such that \(\gamma=0\) gives the Picard system and \(\gamma=1\) gives the Newton system. Such a parameter can be handy in software to easily switch between the methods.

Given \(A(u)\), \(b(u)\), and an initial guess \(u^{-}\), iterate until convergence:

- solve \((A + \gamma(A^{\prime}(u^{-})u^{-} + b^{\prime}(u^{-})))\delta u = -A(u^{-})u^{-} + b(u^{-})\) with respect to \(\delta u\)

- \(u = u^{-} + \omega\delta u\)

- \(u^{-}\ \leftarrow\ u\)

\(\gamma =1\) gives a Newton method while \(\gamma =0\) corresponds to Picard iteration.

5.16 Stopping criteria

Let \(||\cdot||\) be the standard Euclidean vector norm. Four termination criteria are much in use:

- Absolute change in solution: \(||u - u^{-}||\leq \epsilon_u\)

- Relative change in solution: \(||u - u^{-}||\leq \epsilon_u ||u_0||\), where \(u_0\) denotes the start value of \(u^{-}\) in the iteration

- Absolute residual: \(||F(u)|| \leq \epsilon_r\)

- Relative residual: \(||F(u)|| \leq \epsilon_r ||F(u_0)||\)

To prevent divergent iterations to run forever, one terminates the iterations when the current number of iterations \(k\) exceeds a maximum value \(k_{\max}\).

The relative criteria are most used since they are not sensitive to the characteristic size of \(u\). Nevertheless, the relative criteria can be misleading when the initial start value for the iteration is very close to the solution, since an unnecessary reduction in the error measure is enforced. In such cases the absolute criteria work better. It is common to combine the absolute and relative measures of the size of the residual, as in \[ ||F(u)|| \leq \epsilon_{rr} ||F(u_0)|| + \epsilon_{ra}, \] where \(\epsilon_{rr}\) is the tolerance in the relative criterion and \(\epsilon_{ra}\) is the tolerance in the absolute criterion. With a very good initial guess for the iteration (typically the solution of a differential equation at the previous time level), the term \(||F(u_0)||\) is small and \(\epsilon_{ra}\) is the dominating tolerance. Otherwise, \(\epsilon_{rr} ||F(u_0)||\) and the relative criterion dominates.

With the change in solution as criterion we can formulate a combined absolute and relative measure of the change in the solution: \[ ||\delta u|| \leq \epsilon_{ur} ||u_0|| + \epsilon_{ua}, \] The ultimate termination criterion, combining the residual and the change in solution with a test on the maximum number of iterations, can be expressed as \[ ||F(u)|| \leq \epsilon_{rr} ||F(u_0)|| + \epsilon_{ra} \quad\hbox{or}\quad ||\delta u|| \leq \epsilon_{ur} ||u_0|| + \epsilon_{ua} \quad\hbox{or}\quad k>k_{\max}\tp \] ## Example: A nonlinear ODE model from epidemiology {#sec-nonlin-systems-alg-SI}

A very simple model for the spreading of a disease, such as a flu, takes the form of a \(2\times 2\) ODE system

\[\begin{align} S^{\prime} &= -\beta SI,\\ I^{\prime} &= \beta SI - \nu I, \end{align}\] where \(S(t)\) is the number of people who can get ill (susceptibles) and \(I(t)\) is the number of people who are ill (infected). The constants \(\beta >0\) and \(\nu >0\) must be given along with initial conditions \(S(0)\) and \(I(0)\).

5.16.1 Implicit time discretization

A Crank-Nicolson scheme leads to a \(2\times 2\) system of nonlinear algebraic equations in the unknowns \(S^{n+1}\) and \(I^{n+1}\):

\[\begin{align} \frac{S^{n+1}-S^n}{\Delta t} &= -\beta [SI]^{n+\half} \approx -\frac{\beta}{2}(S^nI^n + S^{n+1}I^{n+1}),\\ \frac{I^{n+1}-I^n}{\Delta t} &= \beta [SI]^{n+\half} - \nu I^{n+\half} \approx \frac{\beta}{2}(S^nI^n + S^{n+1}I^{n+1}) - \frac{\nu}{2}(I^n + I^{n+1})\tp \end{align}\] Introducing \(S\) for \(S^{n+1}\), \(S^{(1)}\) for \(S^n\), \(I\) for \(I^{n+1}\) and \(I^{(1)}\) for \(I^n\), we can rewrite the system as

\[ F_S(S,I) = S - S^{(1)} + \half\Delta t\beta(S^{(1)}I^{(1)} + SI) = 0, \tag{5.15}\] \[ F_I(S,I) = I - I^{(1)} - \half\Delta t\beta(S^{(1)}I^{(1)} + SI) + \half\Delta t\nu(I^{(1)} + I) =0\tp \tag{5.16}\]

5.16.2 A Picard iteration

We assume that we have approximations \(S^{-}\) and \(I^{-}\) to \(S\) and \(I\), respectively. A way of linearizing the only nonlinear term \(SI\) is to write \(I^{-}S\) in the \(F_S=0\) equation and \(S^{-}I\) in the \(F_I=0\) equation, which also decouples the equations. Solving the resulting linear equations with respect to the unknowns \(S\) and \(I\) gives

\[\begin{align*} S &= \frac{S^{(1)} - \half\Delta t\beta S^{(1)}I^{(1)}} {1 + \half\Delta t\beta I^{-}}, \\ I &= \frac{I^{(1)} + \half\Delta t\beta S^{(1)}I^{(1)} - \half\Delta t\nu I^{(1)}} {1 - \half\Delta t\beta S^{-} + \half\Delta t \nu}\tp \end{align*}\] Before a new iteration, we must update \(S^{-}\ \leftarrow\ S\) and \(I^{-}\ \leftarrow\ I\).

5.16.3 Newton’s method

The nonlinear system (5.15)-(5.16) can be written as \(F(u)=0\) with \(F=(F_S,F_I)\) and \(u=(S,I)\). The Jacobian becomes \[ J = \left(\begin{array}{cc} \frac{\partial}{\partial S} F_S & \frac{\partial}{\partial I}F_S\\ \frac{\partial}{\partial S} F_I & \frac{\partial}{\partial I}F_I \end{array}\right) = \left(\begin{array}{cc} 1 + \half\Delta t\beta I & \half\Delta t\beta S\\ + \half\Delta t\beta I & 1 - \half\Delta t\beta S + \half\Delta t\nu \end{array}\right) \tp \] The Newton system \(J(u^{-})\delta u = -F(u^{-})\) to be solved in each iteration is then

\[\begin{align*} & \left(\begin{array}{cc} 1 + \half\Delta t\beta I^{-} & \half\Delta t\beta S^{-}\\ - \half\Delta t\beta I^{-} & 1 - \half\Delta t\beta S^{-} + \half\Delta t\nu \end{array}\right) \left(\begin{array}{c} \delta S\\ \delta I \end{array}\right) =\\ & \qquad\qquad \left(\begin{array}{c} S^{-} - S^{(1)} + \half\Delta t\beta(S^{(1)}I^{(1)} + S^{-}I^{-})\\ I^{-} - I^{(1)} - \half\Delta t\beta(S^{(1)}I^{(1)} + S^{-}I^{-}) + \half\Delta t\nu(I^{(1)} + I^{-}) \end{array}\right) \end{align*}\]

Remark. For this particular system of ODEs, explicit time integration methods work very well. Even a Forward Euler scheme is fine, but (as also experienced more generally) the 4-th order Runge-Kutta method is an excellent balance between high accuracy, high efficiency, and simplicity.

5.17 Nonlinear diffusion model

The attention is now turned to nonlinear partial differential equations (PDEs) and application of the techniques explained above for ODEs. The model problem is a nonlinear diffusion equation for \(u(\x,t)\):

\[\begin{alignat}{2} \frac{\partial u}{\partial t} &= \nabla\cdot (\dfc(u)\nabla u) + f(u),\quad &\x\in\Omega,\ t\in (0,T], \\ -\dfc(u)\frac{\partial u}{\partial n} &= g,\quad &\x\in\partial\Omega_N,\ t\in (0,T], \\ u &= u_0,\quad &\x\in\partial\Omega_D,\ t\in (0,T]\tp \end{alignat}\]In the present section, our aim is to discretize this problem in time and then present techniques for linearizing the time-discrete PDE problem “at the PDE level” such that we transform the nonlinear stationary PDE problem at each time level into a sequence of linear PDE problems, which can be solved using any method for linear PDEs. This strategy avoids the solution of systems of nonlinear algebraic equations. In Section Section 5.22 we shall take the opposite (and more common) approach: discretize the nonlinear problem in time and space first, and then solve the resulting nonlinear algebraic equations at each time level by the methods of Section Section 5.13. Very often, the two approaches are mathematically identical, so there is no preference from a computational efficiency point of view. The details of the ideas sketched above will hopefully become clear through the forthcoming examples.

5.18 Explicit time integration

The nonlinearities in the PDE are trivial to deal with if we choose an explicit time integration method for the nonlinear diffusion equation, such as the Forward Euler method: \[ [D_t^+ u = \nabla\cdot (\dfc(u)\nabla u) + f(u)]^n, \] or written out, \[ \frac{u^{n+1} - u^n}{\Delta t} = \nabla\cdot (\dfc(u^n)\nabla u^n) + f(u^n), \] which is a linear equation in the unknown \(u^{n+1}\) with solution \[ u^{n+1} = u^n + \Delta t\nabla\cdot (\dfc(u^n)\nabla u^n) + \Delta t f(u^n)\tp \] The disadvantage with this discretization is the strict stability criterion \(\Delta t \leq h^2/(6\max\alpha)\) for the case \(f=0\) and a standard 2nd-order finite difference discretization in 3D space with mesh cell sizes \(h=\Delta x=\Delta y=\Delta z\).

5.19 Backward Euler scheme and Picard iteration

A Backward Euler scheme for the nonlinear diffusion equation reads \[ [D_t^- u = \nabla\cdot (\dfc(u)\nabla u) + f(u)]^n\tp \] Written out, \[ \frac{u^{n} - u^{n-1}}{\Delta t} = \nabla\cdot (\dfc(u^n)\nabla u^n) + f(u^n)\tp \tag{5.17}\] This is a nonlinear PDE for the unknown function \(u^n(\x)\). Such a PDE can be viewed as a time-independent PDE where \(u^{n-1}(\x)\) is a known function.

We introduce a Picard iteration with \(k\) as iteration counter. A typical linearization of the \(\nabla\cdot(\dfc(u^n)\nabla u^n)\) term in iteration \(k+1\) is to use the previously computed \(u^{n,k}\) approximation in the diffusion coefficient: \(\dfc(u^{n,k})\). The nonlinear source term is treated similarly: \(f(u^{n,k})\). The unknown function \(u^{n,k+1}\) then fulfills the linear PDE \[ \frac{u^{n,k+1} - u^{n-1}}{\Delta t} = \nabla\cdot (\dfc(u^{n,k}) \nabla u^{n,k+1}) + f(u^{n,k})\tp \tag{5.18}\] The initial guess for the Picard iteration at this time level can be taken as the solution at the previous time level: \(u^{n,0}=u^{n-1}\).

We can alternatively apply the implementation-friendly notation where \(u\) corresponds to the unknown we want to solve for, i.e., \(u^{n,k+1}\) above, and \(u^{-}\) is the most recently computed value, \(u^{n,k}\) above. Moreover, \(u^{(1)}\) denotes the unknown function at the previous time level, \(u^{n-1}\) above. The PDE to be solved in a Picard iteration then looks like \[ \frac{u - u^{(1)}}{\Delta t} = \nabla\cdot (\dfc(u^{-}) \nabla u) + f(u^{-})\tp \tag{5.19}\] At the beginning of the iteration we start with the value from the previous time level: \(u^{-}=u^{(1)}\), and after each iteration, \(u^{-}\) is updated to \(u\).

The previous derivations of the numerical scheme for time discretizations of PDEs have, strictly speaking, a somewhat sloppy notation, but it is much used and convenient to read. A more precise notation must distinguish clearly between the exact solution of the PDE problem, here denoted \(\uex(\x,t)\), and the exact solution of the spatial problem, arising after time discretization at each time level, where (5.17) is an example. The latter is here represented as \(u^n(\x)\) and is an approximation to \(\uex(\x,t_n)\). Then we have another approximation \(u^{n,k}(\x)\) to \(u^n(\x)\) when solving the nonlinear PDE problem for \(u^n\) by iteration methods, as in (5.18).

In our notation, \(u\) is a synonym for \(u^{n,k+1}\) and \(u^{(1)}\) is a synonym for \(u^{n-1}\), inspired by what are natural variable names in a code. We will usually state the PDE problem in terms of \(u\) and quickly redefine the symbol \(u\) to mean the numerical approximation, while \(\uex\) is not explicitly introduced unless we need to talk about the exact solution and the approximate solution at the same time.

5.20 Backward Euler scheme and Newton’s method

At time level \(n\), we have to solve the stationary PDE (5.17). In the previous section, we saw how this can be done with Picard iterations. Another alternative is to apply the idea of Newton’s method in a clever way. Normally, Newton’s method is defined for systems of algebraic equations, but the idea of the method can be applied at the PDE level too.

5.20.1 Linearization via Taylor expansions

Let \(u^{n,k}\) be an approximation to the unknown \(u^n\). We seek a better approximation on the form \[ u^{n} = u^{n,k} + \delta u\tp \tag{5.20}\] The idea is to insert (5.20) in (5.17), Taylor expand the nonlinearities and keep only the terms that are linear in \(\delta u\) (which makes (5.20) an approximation for \(u^{n}\)). Then we can solve a linear PDE for the correction \(\delta u\) and use (5.20) to find a new approximation \[ u^{n,k+1}=u^{n,k}+\delta u \] to \(u^{n}\). Repeating this procedure gives a sequence \(u^{n,k+1}\), \(k=0,1,\ldots\) that hopefully converges to the goal \(u^n\).

Let us carry out all the mathematical details for the nonlinear diffusion PDE discretized by the Backward Euler method. Inserting (5.20) in (5.17) gives \[ \frac{u^{n,k} +\delta u - u^{n-1}}{\Delta t} = \nabla\cdot (\dfc(u^{n,k} + \delta u)\nabla (u^{n,k}+\delta u)) + f(u^{n,k}+\delta u)\tp \tag{5.21}\] We can Taylor expand \(\dfc(u^{n,k} + \delta u)\) and \(f(u^{n,k}+\delta u)\):

\[\begin{align*} \dfc(u^{n,k} + \delta u) & = \dfc(u^{n,k}) + \frac{d\dfc}{du}(u^{n,k}) \delta u + \Oof{\delta u^2}\approx \dfc(u^{n,k}) + \dfc^{\prime}(u^{n,k})\delta u,\\ f(u^{n,k}+\delta u) &= f(u^{n,k}) + \frac{df}{du}(u^{n,k})\delta u + \Oof{\delta u^2}\approx f(u^{n,k}) + f^{\prime}(u^{n,k})\delta u\tp \end{align*}\] Inserting the linear approximations of \(\dfc\) and \(f\) in (5.21) results in

\[ \begin{split} \frac{u^{n,k} +\delta u - u^{n-1}}{\Delta t} &= \nabla\cdot (\dfc(u^{n,k})\nabla u^{n,k}) + f(u^{n,k}) + \\ &\qquad \nabla\cdot (\dfc(u^{n,k})\nabla \delta u) + \nabla\cdot (\dfc^{\prime}(u^{n,k})\delta u\nabla u^{n,k}) + \\ &\qquad \nabla\cdot (\dfc^{\prime}(u^{n,k})\delta u\nabla \delta u) + f^{\prime}(u^{n,k})\delta u\tp \end{split} \tag{5.22}\] The term \(\dfc^{\prime}(u^{n,k})\delta u\nabla \delta u\) is of order \(\delta u^2\) and therefore omitted since we expect the correction \(\delta u\) to be small (\(\delta u \gg \delta u^2\)). Reorganizing the equation gives a PDE for \(\delta u\) that we can write in short form as \[ \delta F(\delta u; u^{n,k}) = -F(u^{n,k}), \] where

\[ F(u^{n,k}) = \frac{u^{n,k} - u^{n-1}}{\Delta t} - \nabla\cdot (\dfc(u^{n,k})\nabla u^{n,k}) + f(u^{n,k}), \] \[ \begin{split} \delta F(\delta u; u^{n,k}) &= - \frac{1}{\Delta t}\delta u + \nabla\cdot (\dfc(u^{n,k})\nabla \delta u) + \\ &\quad \nabla\cdot (\dfc^{\prime}(u^{n,k})\delta u\nabla u^{n,k}) + f^{\prime}(u^{n,k})\delta u\tp \end{split} \tag{5.23}\] Note that \(\delta F\) is a linear function of \(\delta u\), and \(F\) contains only terms that are known, such that the PDE for \(\delta u\) is indeed linear.

The notational form \(\delta F = -F\) resembles the Newton system \(J\delta u =-F\) for systems of algebraic equations, with \(\delta F\) as \(J\delta u\). The unknown vector in a linear system of algebraic equations enters the system as a linear operator in terms of a matrix-vector product (\(J\delta u\)), while at the PDE level we have a linear differential operator instead (\(\delta F\)).

5.20.2 Similarity with Picard iteration

We can rewrite the PDE for \(\delta u\) in a slightly different way too if we define \(u^{n,k} + \delta u\) as \(u^{n,k+1}\).

\[\begin{align} & \frac{u^{n,k+1} - u^{n-1}}{\Delta t} = \nabla\cdot (\dfc(u^{n,k})\nabla u^{n,k+1}) + f(u^{n,k})\nonumber\\ &\qquad + \nabla\cdot (\dfc^{\prime}(u^{n,k})\delta u\nabla u^{n,k}) + f^{\prime}(u^{n,k})\delta u\tp \end{align}\] Note that the first line is the same PDE as arises in the Picard iteration, while the remaining terms arise from the differentiations that are an inherent ingredient in Newton’s method.

5.20.3 Implementation

For coding we want to introduce \(u\) for \(u^n\), \(u^{-}\) for \(u^{n,k}\) and \(u^{(1)}\) for \(u^{n-1}\). The formulas for \(F\) and \(\delta F\) are then more clearly written as

\[ F(u^{-}) = \frac{u^{-} - u^{(1)}}{\Delta t} - \nabla\cdot (\dfc(u^{-})\nabla u^{-}) + f(u^{-}), \] \[ \begin{split} \delta F(\delta u; u^{-}) &= - \frac{1}{\Delta t}\delta u + \nabla\cdot (\dfc(u^{-})\nabla \delta u) + \\ &\quad \nabla\cdot (\dfc^{\prime}(u^{-})\delta u\nabla u^{-}) + f^{\prime}(u^{-})\delta u\tp \end{split} \tag{5.24}\] The form that orders the PDE as the Picard iteration terms plus the Newton method’s derivative terms becomes

\[\begin{align} & \frac{u - u^{(1)}}{\Delta t} = \nabla\cdot (\dfc(u^{-})\nabla u) + f(u^{-}) + \nonumber\\ &\qquad \gamma(\nabla\cdot (\dfc^{\prime}(u^{-})(u - u^{-})\nabla u^{-}) + f^{\prime}(u^{-})(u - u^{-}))\tp \end{align}\] The Picard and full Newton versions correspond to \(\gamma=0\) and \(\gamma=1\), respectively.

5.20.4 Derivation with alternative notation

Some may prefer to derive the linearized PDE for \(\delta u\) using the more compact notation. We start with inserting \(u^n=u^{-}+\delta u\) to get \[ \frac{u^{-} +\delta u - u^{n-1}}{\Delta t} = \nabla\cdot (\dfc(u^{-} + \delta u)\nabla (u^{-}+\delta u)) + f(u^{-}+\delta u)\tp \] Taylor expanding,

\[\begin{align*} \dfc(u^{-} + \delta u) & \approx \dfc(u^{-}) + \dfc^{\prime}(u^{-})\delta u,\\ f(u^{-}+\delta u) & \approx f(u^{-}) + f^{\prime}(u^{-})\delta u, \end{align*}\] and inserting these expressions gives a less cluttered PDE for \(\delta u\):

\[\begin{align*} \frac{u^{-} +\delta u - u^{n-1}}{\Delta t} &= \nabla\cdot (\dfc(u^{-})\nabla u^{-}) + f(u^{-}) + \\ &\qquad \nabla\cdot (\dfc(u^{-})\nabla \delta u) + \nabla\cdot (\dfc^{\prime}(u^{-})\delta u\nabla u^{-}) + \\ &\qquad \nabla\cdot (\dfc^{\prime}(u^{-})\delta u\nabla \delta u) + f^{\prime}(u^{-})\delta u\tp \end{align*}\]

5.21 Crank-Nicolson discretization

A Crank-Nicolson discretization of the nonlinear diffusion equation applies a centered difference at \(t_{n+\frac{1}{2}}\): \[ [D_t u = \nabla\cdot (\dfc(u)\nabla u) + f(u)]^{n+\frac{1}{2}}\tp \] The standard technique is to apply an arithmetic average for quantities defined between two mesh points, e.g., \[ u^{n+\frac{1}{2}}\approx \frac{1}{2}(u^n + u^{n+1})\tp \] However, with nonlinear terms we have many choices of formulating an arithmetic mean:

\[\begin{align} [f(u)]^{n+\frac{1}{2}} &\approx f(\frac{1}{2}(u^n + u^{n+1})) = [f(\overline{u}^t)]^{n+\frac{1}{2}},\\ [f(u)]^{n+\frac{1}{2}} &\approx \frac{1}{2}(f(u^n) + f(u^{n+1})) =[\overline{f(u)}^t]^{n+\frac{1}{2}},\\ [\dfc(u)\nabla u]^{n+\frac{1}{2}} &\approx \dfc(\frac{1}{2}(u^n + u^{n+1}))\nabla (\frac{1}{2}(u^n + u^{n+1})) = [\dfc(\overline{u}^t)\nabla \overline{u}^t]^{n+\frac{1}{2}},\\ [\dfc(u)\nabla u]^{n+\frac{1}{2}} &\approx \frac{1}{2}(\dfc(u^n) + \dfc(u^{n+1}))\nabla (\frac{1}{2}(u^n + u^{n+1})) = [\overline{\dfc(u)}^t\nabla\overline{u}^t]^{n+\frac{1}{2}},\\ [\dfc(u)\nabla u]^{n+\frac{1}{2}} &\approx \frac{1}{2}(\dfc(u^n)\nabla u^n + \dfc(u^{n+1})\nabla u^{n+1}) = [\overline{\dfc(u)\nabla u}^t]^{n+\frac{1}{2}}\tp \end{align}\]

A big question is whether there are significant differences in accuracy between taking the products of arithmetic means or taking the arithmetic mean of products. Exercise Section 5.41 investigates this question, and the answer is that the approximation is \(\Oof{\Delta t^2}\) in both cases.

5.22 Discretization in space and Newton’s method

Section Section 5.17 presented methods for linearizing time-discrete PDEs directly prior to discretization in space. We can alternatively carry out the discretization in space of the time-discrete nonlinear PDE problem and get a system of nonlinear algebraic equations, which can be solved by Picard iteration or Newton’s method as presented in Section Section 5.13. This latter approach will now be described in detail.

We shall work with the 1D problem \[ -(\dfc(u)u^{\prime})^{\prime} + au = f(u),\quad x\in (0,L), \quad \dfc(u(0))u^{\prime}(0) = C,\ u(L)=D \tp \tag{5.25}\]

The problem (5.25) arises from the stationary limit of a diffusion equation, \[ \frac{\partial u}{\partial t} = \frac{\partial}{\partial x}\left( \alpha(u)\frac{\partial u}{\partial x}\right) - au + f(u), \tag{5.26}\] as \(t\rightarrow\infty\) and \(\partial u/\partial t\rightarrow 0\). Alternatively, the problem (5.25) arises at each time level from implicit time discretization of (5.26). For example, a Backward Euler scheme for (5.26) leads to \[ \frac{u^{n}-u^{n-1}}{\Delta t} = \frac{d}{dx}\left( \alpha(u^n)\frac{du^n}{dx}\right) - au^n + f(u^n)\tp \tag{5.27}\] Introducing \(u(x)\) for \(u^n(x)\), \(u^{(1)}\) for \(u^{n-1}\), and defining \(f(u)\) in (5.25) to be \(f(u)\) in (5.27) plus \(u^{n-1}/\Delta t\), gives (5.25) with \(a=1/\Delta t\).

5.23 Finite difference discretization

The nonlinearity in the differential equation (5.25) poses no more difficulty than a variable coefficient, as in the term \((\dfc(x)u^{\prime})^{\prime}\). We can therefore use a standard finite difference approach when discretizing the Laplace term with a variable coefficient: \[ [-D_x\dfc D_x u +au = f]_i\tp \] Writing this out for a uniform mesh with points \(x_i=i\Delta x\), \(i=0,\ldots,N_x\), leads to

\[ -\frac{1}{\Delta x^2} \left(\dfc_{i+\half}(u_{i+1}-u_i) - \dfc_{i-\half}(u_{i}-u_{i-1})\right) + au_i = f(u_i)\tp \tag{5.28}\] This equation is valid at all the mesh points \(i=0,1,\ldots,N_x-1\). At \(i=N_x\) we have the Dirichlet condition \(u_i=0\). The only difference from the case with \((\dfc(x)u^{\prime})^{\prime}\) and \(f(x)\) is that now \(\dfc\) and \(f\) are functions of \(u\) and not only of \(x\): \((\dfc(u(x))u^{\prime})^{\prime}\) and \(f(u(x))\).

The quantity \(\dfc_{i+\half}\), evaluated between two mesh points, needs a comment. Since \(\dfc\) depends on \(u\) and \(u\) is only known at the mesh points, we need to express \(\dfc_{i+\half}\) in terms of \(u_i\) and \(u_{i+1}\). For this purpose we use an arithmetic mean, although a harmonic mean is also common in this context if \(\dfc\) features large jumps. There are two choices of arithmetic means:

\[ \dfc_{i+\half} \approx \dfc(\half(u_i + u_{i+1}) = [\dfc(\overline{u}^x)]^{i+\half}, \] \[ \dfc_{i+\half} \approx \half(\dfc(u_i) + \dfc(u_{i+1})) = [\overline{\dfc(u)}^x]^{i+\half} \tag{5.29}\] Equation (5.28) with the latter approximation then looks like

\[ \begin{split} &-\frac{1}{2\Delta x^2} \left((\dfc(u_i)+\dfc(u_{i+1}))(u_{i+1}-u_i) - (\dfc(u_{i-1})+\dfc(u_{i}))(u_{i}-u_{i-1})\right)\\ &\qquad\qquad + au_i = f(u_i), \end{split} \tag{5.30}\] or written more compactly, \[ [-D_x\overline{\dfc}^x D_x u +au = f]_i\tp \] At mesh point \(i=0\) we have the boundary condition \(\dfc(u)u^{\prime}=C\), which is discretized by \[ [\dfc(u)D_{2x}u = C]_0, \] meaning \[ \dfc(u_0)\frac{u_{1} - u_{-1}}{2\Delta x} = C\tp \tag{5.31}\] The fictitious value \(u_{-1}\) can be eliminated with the aid of (5.30) for \(i=0\). Formally, (5.30) should be solved with respect to \(u_{i-1}\) and that value (for \(i=0\)) should be inserted in (5.31), but it is algebraically much easier to do it the other way around. Alternatively, one can use a ghost cell \([-\Delta x,0]\) and update the \(u_{-1}\) value in the ghost cell according to (5.31) after every Picard or Newton iteration. Such an approach means that we use a known \(u_{-1}\) value in (5.30) from the previous iteration.

5.24 Solution of algebraic equations

5.24.1 The structure of the equation system

The nonlinear algebraic equations (5.30) are of the form \(A(u)u = b(u)\) with

\[\begin{align*} A_{i,i} &= \frac{1}{2\Delta x^2}(\dfc(u_{i-1}) + 2\dfc(u_{i}) \dfc(u_{i+1})) + a,\\ A_{i,i-1} &= -\frac{1}{2\Delta x^2}(\dfc(u_{i-1}) + \dfc(u_{i})),\\ A_{i,i+1} &= -\frac{1}{2\Delta x^2}(\dfc(u_{i}) + \dfc(u_{i+1})),\\ b_i &= f(u_i)\tp \end{align*}\] The matrix \(A(u)\) is tridiagonal: \(A_{i,j}=0\) for \(j > i+1\) and \(j < i-1\).

The above expressions are valid for internal mesh points \(1\leq i\leq N_x-1\). For \(i=0\) we need to express \(u_{i-1}=u_{-1}\) in terms of \(u_1\) using (5.31): \[ u_{-1} = u_1 -\frac{2\Delta x}{\dfc(u_0)}C\tp \tag{5.32}\] This value must be inserted in \(A_{0,0}\). The expression for \(A_{i,i+1}\) applies for \(i=0\), and \(A_{i,i-1}\) does not enter the system when \(i=0\).

Regarding the last equation, its form depends on whether we include the Dirichlet condition \(u(L)=D\), meaning \(u_{N_x}=D\), in the nonlinear algebraic equation system or not. Suppose we choose \((u_0,u_1,\ldots,u_{N_x-1})\) as unknowns, later referred to as systems without Dirichlet conditions. The last equation corresponds to \(i=N_x-1\). It involves the boundary value \(u_{N_x}\), which is substituted by \(D\). If the unknown vector includes the boundary value, \((u_0,u_1,\ldots,u_{N_x})\), later referred to as system including Dirichlet conditions, the equation for \(i=N_x-1\) just involves the unknown \(u_{N_x}\), and the final equation becomes \(u_{N_x}=D\), corresponding to \(A_{i,i}=1\) and \(b_i=D\) for \(i=N_x\).

5.24.2 Picard iteration

The obvious Picard iteration scheme is to use previously computed values of \(u_i\) in \(A(u)\) and \(b(u)\), as described more in detail in Section Section 5.13. With the notation \(u^{-}\) for the most recently computed value of \(u\), we have the system \(F(u)\approx \hat F(u) = A(u^{-})u - b(u^{-})\), with \(F=(F_0,F_1,\ldots,F_m)\), \(u=(u_0,u_1,\ldots,u_m)\). The index \(m\) is \(N_x\) if the system includes the Dirichlet condition as a separate equation and \(N_x-1\) otherwise. The matrix \(A(u^{-})\) is tridiagonal, so the solution procedure is to fill a tridiagonal matrix data structure and the right-hand side vector with the right numbers and call a Gaussian elimination routine for tridiagonal linear systems.

5.24.3 Mesh with two cells

It helps on the understanding of the details to write out all the mathematics in a specific case with a small mesh, say just two cells (\(N_x=2\)). We use \(u^{-}_i\) for the \(i\)-th component in \(u^{-}\).

The starting point is the basic expressions for the nonlinear equations at mesh point \(i=0\) and \(i=1\):

\[ A_{0,-1}u_{-1} + A_{0,0}u_0 + A_{0,1}u_1 = b_0, \tag{5.33}\] \[ A_{1,0}u_{0} + A_{1,1}u_1 + A_{1,2}u_2 = b_1\tp \tag{5.34}\] Equation (5.33) written out reads

\[\begin{align*} \frac{1}{2\Delta x^2}(& -(\dfc(u_{-1}) + \dfc(u_{0}))u_{-1}\, +\\ & (\dfc(u_{-1}) + 2\dfc(u_{0}) + \dfc(u_{1}))u_0\, -\\ & (\dfc(u_{0}) + \dfc(u_{1})))u_1 + au_0 =f(u_0)\tp \end{align*}\] We must then replace \(u_{-1}\) by (5.32). With Picard iteration we get

\[\begin{align*} \frac{1}{2\Delta x^2}(& -(\dfc(u^-**{-1}) + 2\dfc(u^-**{0}) + \dfc(u^-_{1}))u_1\, +\\ &(\dfc(u^-**{-1}) + 2\dfc(u^-**{0}) + \dfc(u^-_{1}))u_0 + au_0\\ &=f(u^-_0) - \frac{1}{\dfc(u^-**0)\Delta x}(\dfc(u^-**{-1}) + \dfc(u^-_{0}))C, \end{align*}\] where \[ u^-_{-1} = u_1^- -\frac{2\Delta x}{\dfc(u^-_0)}C\tp \] Equation (5.34) contains the unknown \(u_2\) for which we have a Dirichlet condition. In case we omit the condition as a separate equation, (5.34) with Picard iteration becomes

\[\begin{align*} \frac{1}{2\Delta x^2}(&-(\dfc(u^-**{0}) + \dfc(u^-**{1}))u_{0}\, + \\ &(\dfc(u^-**{0}) + 2\dfc(u^-**{1}) + \dfc(u^-_{2}))u_1\, -\\ &(\dfc(u^-**{1}) + \dfc(u^-**{2})))u_2 + au_1 =f(u^-_1)\tp \end{align*}\] We must now move the \(u_2\) term to the right-hand side and replace all occurrences of \(u_2\) by \(D\):

\[\begin{align*} \frac{1}{2\Delta x^2}(&-(\dfc(u^-**{0}) + \dfc(u^-**{1}))u_{0}\, +\\ & (\dfc(u^-**{0}) + 2\dfc(u^-**{1}) + \dfc(D)))u_1 + au_1\\ &=f(u^-**1) + \frac{1}{2\Delta x^2}(\dfc(u^-**{1}) + \dfc(D))D\tp \end{align*}\]

The two equations can be written as a \(2\times 2\) system: \[ \left(\begin{array}{cc} B_{0,0}& B_{0,1}\\ B_{1,0} & B_{1,1} \end{array}\right) \left(\begin{array}{c} u_0\\ u_1 \end{array}\right) = \left(\begin{array}{c} d_0\\ d_1 \end{array}\right), \] where

\[\begin{align} B_{0,0} &=\frac{1}{2\Delta x^2}(\dfc(u^-**{-1}) + 2\dfc(u^-**{0}) + \dfc(u^-_{1})) + a,\\ B_{0,1} &= -\frac{1}{2\Delta x^2}(\dfc(u^-**{-1}) + 2\dfc(u^-**{0}) + \dfc(u^-_{1})),\\ B_{1,0} &= -\frac{1}{2\Delta x^2}(\dfc(u^-**{0}) + \dfc(u^-**{1})),\\ B_{1,1} &= \frac{1}{2\Delta x^2}(\dfc(u^-**{0}) + 2\dfc(u^-**{1}) + \dfc(D)) + a,\\ d_0 &= f(u^-_0) - \frac{1}{\dfc(u^-**0)\Delta x}(\dfc(u^-**{-1}) + \dfc(u^-_{0}))C,\\ d_1 &= f(u^-**1) + \frac{1}{2\Delta x^2}(\dfc(u^-**{1}) + \dfc(D))D\tp \end{align}\]

The system with the Dirichlet condition becomes \[ \left(\begin{array}{ccc} B_{0,0}& B_{0,1} & 0\\ B_{1,0} & B_{1,1} & B_{1,2}\\ 0 & 0 & 1 \end{array}\right) \left(\begin{array}{c} u_0\\ u_1\\ u_2 \end{array}\right) = \left(\begin{array}{c} d_0\\ d_1\\ D \end{array}\right), \] with

\[\begin{align} B_{1,1} &= \frac{1}{2\Delta x^2}(\dfc(u^-**{0}) + 2\dfc(u^-**{1}) + \dfc(u_2)) + a,\\ B_{1,2} &= - \frac{1}{2\Delta x^2}(\dfc(u^-_{1}) + \dfc(u_2))),\\ d_1 &= f(u^-_1)\tp \end{align}\] Other entries are as in the \(2\times 2\) system.

5.24.4 Newton’s method

The Jacobian must be derived in order to use Newton’s method. Here it means that we need to differentiate \(F(u)=A(u)u - b(u)\) with respect to the unknown parameters \(u_0,u_1,\ldots,u_m\) (\(m=N_x\) or \(m=N_x-1\), depending on whether the Dirichlet condition is included in the nonlinear system \(F(u)=0\) or not). Nonlinear equation number \(i\) has the structure \[ F_i = A_{i,i-1}(u_{i-1},u_i)u_{i-1} + A_{i,i}(u_{i-1},u_i,u_{i+1})u_i + A_{i,i+1}(u_i, u_{i+1})u_{i+1} - b_i(u_i)\tp \] Computing the Jacobian requires careful differentiation. For example,

\[\begin{align*} \frac{\partial}{\partial u_i}(A_{i,i}(u_{i-1},u_i,u_{i+1})u_i) &= \frac{\partial A_{i,i}}{\partial u_i}u_i + A_{i,i} \frac{\partial u_i}{\partial u_i}\\ &= \frac{\partial}{\partial u_i}( \frac{1}{2\Delta x^2}(\dfc(u_{i-1}) + 2\dfc(u_{i}) +\dfc(u_{i+1})) + a)u_i +\\ &\quad\frac{1}{2\Delta x^2}(\dfc(u_{i-1}) + 2\dfc(u_{i}) +\dfc(u_{i+1})) + a\\ &= \frac{1}{2\Delta x^2}(2\dfc^\prime (u_i)u_i +\dfc(u_{i-1}) + 2\dfc(u_{i}) +\dfc(u_{i+1})) + a\tp \end{align*}\] The complete Jacobian becomes

\[\begin{align*} J_{i,i} &= \frac{\partial F_i}{\partial u_i} = \frac{\partial A_{i,i-1}}{\partial u_i}u_{i-1} + \frac{\partial A_{i,i}}{\partial u_i}u_i + A_{i,i} + \frac{\partial A_{i,i+1}}{\partial u_i}u_{i+1} - \frac{\partial b_i}{\partial u_{i}}\\ &= \frac{1}{2\Delta x^2}( -\dfc^{\prime}(u_i)u_{i-1} +2\dfc^{\prime}(u_i)u_{i} +\dfc(u_{i-1}) + 2\dfc(u_i) + \dfc(u_{i+1})) +\\ &\quad a -\frac{1}{2\Delta x^2}\dfc^{\prime}(u_{i})u_{i+1} - b^{\prime}(u_i),\\ J_{i,i-1} &= \frac{\partial F_i}{\partial u_{i-1}} = \frac{\partial A_{i,i-1}}{\partial u_{i-1}}u_{i-1} + A_{i-1,i} + \frac{\partial A_{i,i}}{\partial u_{i-1}}u_i - \frac{\partial b_i}{\partial u_{i-1}}\\ &= \frac{1}{2\Delta x^2}( -\dfc^{\prime}(u_{i-1})u_{i-1} - (\dfc(u_{i-1}) + \dfc(u_i)) + \dfc^{\prime}(u_{i-1})u_i),\\ J_{i,i+1} &= \frac{\partial A_{i,i+1}}{\partial u_{i-1}}u_{i+1} + A_{i+1,i} + \frac{\partial A_{i,i}}{\partial u_{i+1}}u_i - \frac{\partial b_i}{\partial u_{i+1}}\\ &=\frac{1}{2\Delta x^2}( -\dfc^{\prime}(u_{i+1})u_{i+1} - (\dfc(u_{i}) + \dfc(u_{i+1})) + \dfc^{\prime}(u_{i+1})u_i) \end{align*}\] The explicit expression for nonlinear equation number \(i\), \(F_i(u_0,u_1,\ldots)\), arises from moving the \(f(u_i)\) term in (5.30) to the left-hand side:

\[ \begin{split} F_i &= -\frac{1}{2\Delta x^2} \left((\dfc(u_i)+\dfc(u_{i+1}))(u_{i+1}-u_i) - (\dfc(u_{i-1})+\dfc(u_{i}))(u_{i}-u_{i-1})\right)\\ &\qquad\qquad + au_i - f(u_i) = 0\tp \end{split} \tag{5.35}\]

At the boundary point \(i=0\), \(u_{-1}\) must be replaced using the formula (5.32). When the Dirichlet condition at \(i=N_x\) is not a part of the equation system, the last equation \(F_m=0\) for \(m=N_x-1\) involves the quantity \(u_{N_x-1}\) which must be replaced by \(D\). If \(u_{N_x}\) is treated as an unknown in the system, the last equation \(F_m=0\) has \(m=N_x\) and reads \[ F_{N_x}(u_0,\ldots,u_{N_x}) = u_{N_x} - D = 0\tp \] Similar replacement of \(u_{-1}\) and \(u_{N_x}\) must be done in the Jacobian for the first and last row. When \(u_{N_x}\) is included as an unknown, the last row in the Jacobian must help implement the condition \(\delta u_{N_x}=0\), since we assume that \(u\) contains the right Dirichlet value at the beginning of the iteration (\(u_{N_x}=D\)), and then the Newton update should be zero for \(i=0\), i.e., \(\delta u_{N_x}=0\). This also forces the right-hand side to be \(b_i=0\), \(i=N_x\).

We have seen, and can see from the present example, that the linear system in Newton’s method contains all the terms present in the system that arises in the Picard iteration method. The extra terms in Newton’s method can be multiplied by a factor such that it is easy to program one linear system and set this factor to 0 or 1 to generate the Picard or Newton system.

5.25 Solving Nonlinear PDEs with Devito

Having established the finite difference discretization of nonlinear PDEs, we now implement several solvers using Devito. The symbolic approach allows us to express nonlinear equations and handle the time-lagged coefficients naturally.

5.25.1 Nonlinear Diffusion: The Explicit Scheme

The nonlinear diffusion equation \[ u_t = \nabla \cdot (D(u) \nabla u) \] with solution-dependent diffusivity \(D(u)\) requires special treatment. In 1D, the equation becomes: \[ u_t = \frac{\partial}{\partial x}\left(D(u) \frac{\partial u}{\partial x}\right) \]

For explicit time stepping, we evaluate \(D\) at the previous time level: \[ u^{n+1}_i = u^n_i + \frac{\Delta t}{\Delta x^2} \left[D^n_{i+1/2}(u^n_{i+1} - u^n_i) - D^n_{i-1/2}(u^n_i - u^n_{i-1})\right] \]

where \(D^n_{i+1/2} = \frac{1}{2}(D(u^n_i) + D(u^n_{i+1}))\).

5.25.2 The Devito Implementation

from devito import Grid, TimeFunction, Eq, Operator, Constant

import numpy as np

# Domain and discretization

L = 1.0 # Domain length

Nx = 100 # Grid points

T = 0.1 # Final time

F = 0.4 # Target Fourier number

dx = L / Nx

D_max = 1.0 # Maximum diffusion coefficient

dt = F * dx**2 / D_max # Time step from stability

# Create Devito grid

grid = Grid(shape=(Nx + 1,), extent=(L,))

# Time-varying field with space_order=2 for halo access

u = TimeFunction(name='u', grid=grid, time_order=1, space_order=2)5.25.3 Handling the Nonlinear Diffusion Coefficient

For nonlinear diffusion, the diffusivity depends on the solution. Common forms include:

| Type | \(D(u)\) | Application |

|---|---|---|

| Constant | \(D_0\) | Linear heat conduction |

| Linear | \(D_0(1 + \alpha u)\) | Temperature-dependent conductivity |

| Porous medium | \(D_0 m u^{m-1}\) | Flow in porous media |

The src.nonlin module provides several diffusion coefficient functions:

from src.nonlin import (

constant_diffusion,

linear_diffusion,

porous_medium_diffusion,

)

# Constant D(u) = 1.0

D_const = lambda u: constant_diffusion(u, D0=1.0)

# Linear D(u) = 1 + 0.5*u

D_linear = lambda u: linear_diffusion(u, D0=1.0, alpha=0.5)

# Porous medium D(u) = 2*u (m=2)

D_porous = lambda u: porous_medium_diffusion(u, m=2.0, D0=1.0)5.25.4 Complete Nonlinear Diffusion Solver

The src.nonlin module provides solve_nonlinear_diffusion_explicit:

from src.nonlin import solve_nonlinear_diffusion_explicit

import numpy as np

# Initial condition: smooth bump

def I(x):

return np.sin(np.pi * x)

result = solve_nonlinear_diffusion_explicit(

L=1.0, # Domain length

Nx=100, # Grid points

T=0.1, # Final time

F=0.4, # Fourier number

I=I, # Initial condition

D_func=lambda u: linear_diffusion(u, D0=1.0, alpha=0.5),

)

print(f"Final time: {result.t:.4f}")

print(f"Max solution: {result.u.max():.6f}")5.25.5 Reaction-Diffusion with Operator Splitting

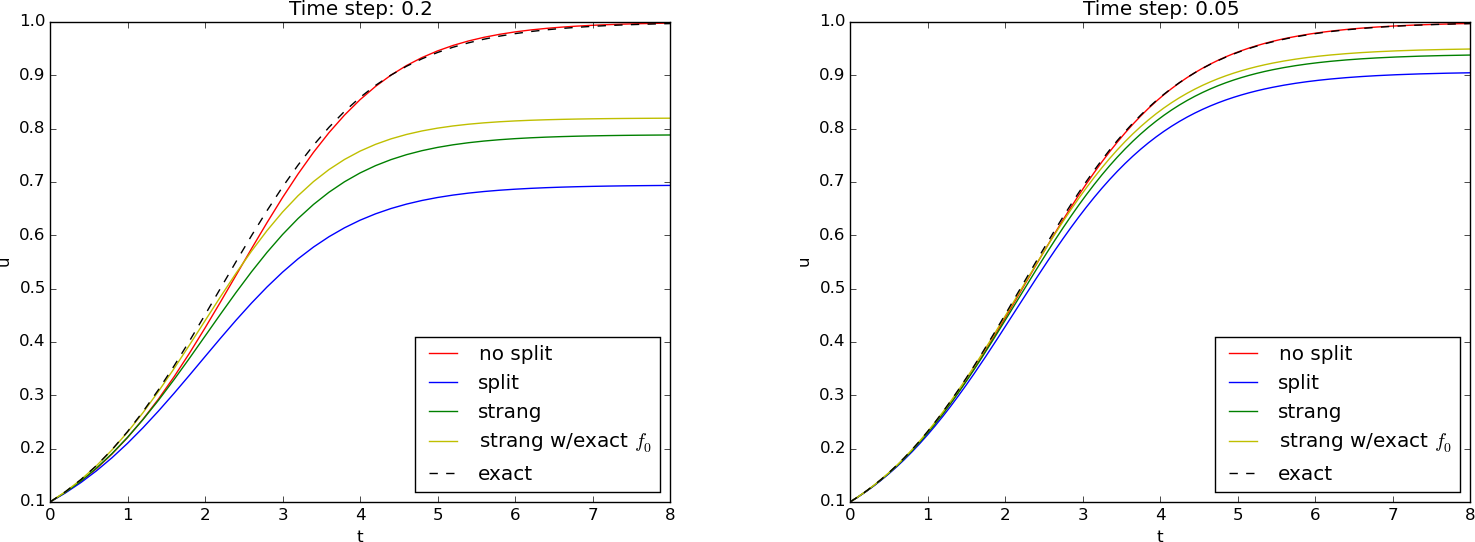

The reaction-diffusion equation \[ u_t = a u_{xx} + R(u) \] combines diffusion with a nonlinear reaction term. Operator splitting separates these effects:

Lie Splitting (first-order): 1. Solve \(u_t = a u_{xx}\) for time \(\Delta t\) 2. Solve \(u_t = R(u)\) for time \(\Delta t\)

Strang Splitting (second-order): 1. Solve \(u_t = R(u)\) for time \(\Delta t/2\) 2. Solve \(u_t = a u_{xx}\) for time \(\Delta t\) 3. Solve \(u_t = R(u)\) for time \(\Delta t/2\)

5.25.6 Reaction Terms

The module provides common reaction terms:

from src.nonlin import (

logistic_reaction,

fisher_reaction,

allen_cahn_reaction,

)

# Logistic growth: R(u) = r*u*(1 - u/K)

R_logistic = lambda u: logistic_reaction(u, r=1.0, K=1.0)

# Fisher-KPP: R(u) = r*u*(1 - u)

R_fisher = lambda u: fisher_reaction(u, r=1.0)

# Allen-Cahn: R(u) = u - u^3

R_allen_cahn = lambda u: allen_cahn_reaction(u, epsilon=1.0)5.25.7 Reaction-Diffusion Solver

from src.nonlin import solve_reaction_diffusion_splitting

# Initial condition with small perturbation

def I(x):

return 0.5 * np.sin(np.pi * x)

# Strang splitting (second-order)

result = solve_reaction_diffusion_splitting(

L=1.0,

a=0.1, # Diffusion coefficient

Nx=100,

T=0.5,

F=0.4,

I=I,

R_func=lambda u: fisher_reaction(u, r=1.0),

splitting="strang",

)The Strang splitting achieves second-order accuracy in time, while Lie splitting is only first-order. For problems with fast reactions or long simulation times, the higher accuracy of Strang splitting is beneficial.

5.25.8 Burgers’ Equation

The viscous Burgers’ equation \[ u_t + u u_x = \nu u_{xx} \] is a prototype for nonlinear advection with viscous dissipation. The nonlinear term \(u u_x\) can cause shock formation for small \(\nu\).

We use the conservative form \((u^2/2)_x\) with centered differences:

from src.nonlin import solve_burgers_equation

result = solve_burgers_equation(

L=2.0, # Domain length

nu=0.01, # Viscosity

Nx=100, # Grid points

T=0.5, # Final time

C=0.5, # Target CFL number

)5.25.9 Stability for Burgers’ Equation

The time step must satisfy both the CFL condition for advection: \[ C = \frac{|u|_{\max} \Delta t}{\Delta x} \le 1 \]

and the diffusion stability condition: \[ F = \frac{\nu \Delta t}{\Delta x^2} \le 0.5 \]

The solver automatically chooses \(\Delta t\) to satisfy both conditions with a safety factor.

5.25.10 The Effect of Viscosity

import matplotlib.pyplot as plt

fig, axes = plt.subplots(1, 2, figsize=(12, 5))

for ax, nu in zip(axes, [0.1, 0.01]):

result = solve_burgers_equation(

L=2.0, nu=nu, Nx=100, T=0.5, C=0.3,

I=lambda x: np.sin(np.pi * x),

save_history=True,

)

for i in range(0, len(result.t_history), len(result.t_history)//5):

ax.plot(result.x, result.u_history[i],

label=f't = {result.t_history[i]:.2f}')

ax.set_xlabel('x')

ax.set_ylabel('u')

ax.set_title(f'Burgers, nu = {nu}')

ax.legend()Higher viscosity (\(\nu = 0.1\)) smooths the solution, while lower viscosity (\(\nu = 0.01\)) allows steeper gradients to develop.

5.25.11 Picard Iteration for Implicit Schemes

For stiff nonlinear problems, implicit time stepping may be necessary. Picard iteration solves the nonlinear system by repeated linearization:

- Guess \(u^{n+1, (0)} = u^n\)

- For \(k = 0, 1, 2, \ldots\):

- Evaluate \(D^{(k)} = D(u^{n+1, (k)})\)

- Solve the linear system for \(u^{n+1, (k+1)}\)

- Check convergence: \(\|u^{n+1, (k+1)} - u^{n+1, (k)}\| < \epsilon\)

from src.nonlin import solve_nonlinear_diffusion_picard

result = solve_nonlinear_diffusion_picard(

L=1.0,

Nx=50,

T=0.05,

dt=0.001, # Can use larger dt than explicit

)The implicit scheme removes the time step restriction but requires solving a linear system at each iteration.

5.25.12 Summary

Key points for nonlinear PDEs with Devito:

- Nonlinear diffusion: Use explicit scheme with lagged coefficient evaluation and Fourier number \(F \le 0.5\)

- Operator splitting: Separates diffusion and reaction for reaction-diffusion equations; Strang is second-order

- Burgers’ equation: Requires both CFL and diffusion stability conditions; viscosity controls smoothness